In under 48 hours, develop a UX plan to evaluate a new site homepage on a 3-month timeline, assuming a team that includes other UX researchers, designers, and developers.

UX Project Plan

Hannah Glazebrook 10/15/2019

Problems:

P1. Users of the homepage are unable to access the site without creating an account. Users may find this annoying and leave the site before accessing the content it has to offer.

P2. Users are not spending as much time on the homepage as the customer would like.

Research Questions:

R1. Who are the users?

R2. What do they want from the site?

R3. Are their needs being met by the site?

R4. What are their biggest concerns and challenges when using the site?

R5. Can we tailor the homepage to the user’s interests?

R6. What information/content has the broadest appeal to users?

R7. Is there a visual aesthetic for the homepage that appeals to the most users/is the most versatile?

R8. How do the new designs compare to the existing homepage in aesthetics, usability, understandability, accessibility, value, and whether the users’ goals for the site are being met?

R9. What is the value of the site to users?

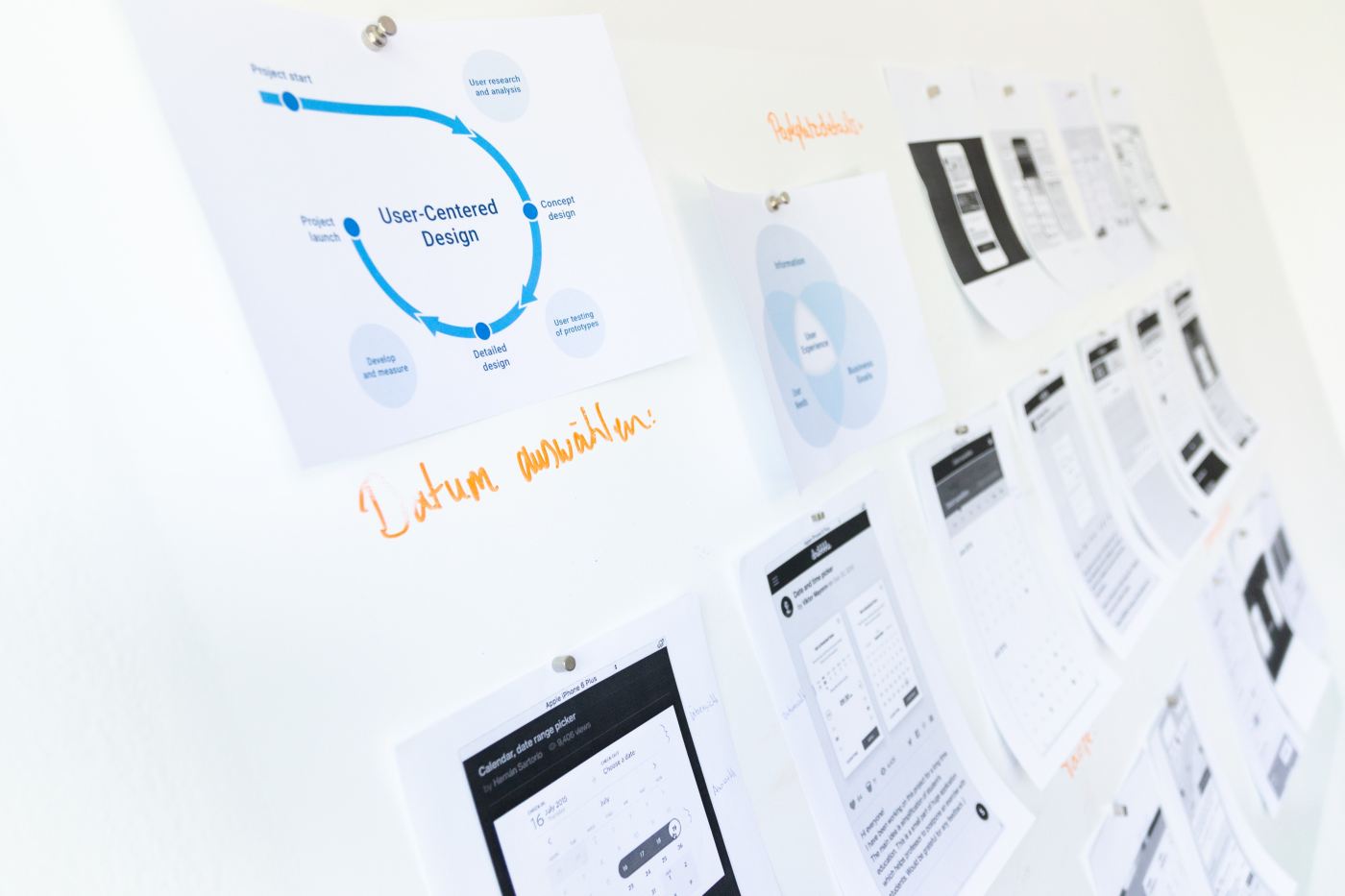

Process

Step 1: Stakeholder requirements gathering

(~1 day) | Addresses R1-R9, may add new research questions (RQs)

Meet with stakeholders from the user research, design, development, project management, marketing and business teams. Identify key decision-makers and hold a project requirements workshop (2-3 hours) to work as a team and identify/solidify:

-The scope of the redesign effort

-The key problems we are trying to solve

-Our problem statement

-Definition of done/minimum viable product

-Success metrics

-Our key user groups (used to identify participants for surveys and usability tests, and to create personas)

Step 2: Assess the severity of the problems, and determine a baseline state for future comparison.

| Activity | Purpose | Estimated Time to Completion (weeks) | Addresses which RQs? |
| --- | --- | --- | --- |
| 2A. Analyze current site metrics (on the existing site) | Determine baseline statistics for site traffic, bounce rate, links accessed, most requested searches, time on page, time on site, and user descriptions (location, time of day, device, browser, etc.). | 1-2 | R1, R5, R6, R8 |
| 2B. Perform heuristic evaluation on the existing site | Determine whether the existing site has usability, accessibility, or design concerns, and verify that they will be addressed in the redesign (determine % compliance). | 1-2 | R3, R4, R7, R8 |
| 2C. Perform accessibility evaluation (including Section 508) on the existing site | Determine whether the existing site has accessibility concerns, and verify that they will be addressed in the redesign (determine % compliance). | 1-2 | R3, R4, R7, R8 |
| 2D. Perform competitive analysis (existing site versus comparable social media platforms) | Look for trends in the current market and identify best practices in social media platforms to inform a better design. | 1-2 | R2, R5, R6, R7, R8 |
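
As a rough illustration of the baseline in 2A, the sketch below computes bounce rate and time-on-page figures from exported session records. The `Session` fields and the rule that a single-page visit counts as a bounce are assumptions made for this example; the team's analytics tool may define and export these differently.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class Session:
    """One visit to the site. Field names are hypothetical."""
    pages_viewed: int
    seconds_on_homepage: float
    seconds_on_site: float


def baseline_metrics(sessions):
    """Summarize bounce rate and time metrics for a list of sessions."""
    if not sessions:
        raise ValueError("need at least one session")
    # Assumption: a visit that views one page or fewer counts as a bounce.
    bounces = sum(1 for s in sessions if s.pages_viewed <= 1)
    return {
        "sessions": len(sessions),
        "bounce_rate": bounces / len(sessions),
        "mean_seconds_on_homepage": mean(s.seconds_on_homepage for s in sessions),
        "median_seconds_on_homepage": median(s.seconds_on_homepage for s in sessions),
        "mean_seconds_on_site": mean(s.seconds_on_site for s in sessions),
    }
```

Running the same function on sessions collected after the redesign would give figures that can be compared directly against this baseline.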

Step 3: Engage with users to collect real data

3A. User Survey (run before and after the design update)

(~4 weeks, but runs concurrently with other efforts) | Addresses R1-R9

Participants: Recruited randomly from visitors to the existing site homepage.

One in five users will be prompted with a pop-up that appears when they leave the site. The survey would run for 2 weeks.

Question: How would you rate your experience with the site today?

Responses: (smiley face) (frowny face) OR (thumbs up) (thumbs down)

(would meet with team to discuss optimal responses)

The prompt would then ask users who answered the question: “Thanks for the feedback! Would you be willing to answer a few more questions to help us improve the site experience?”

The survey would continue to offer questions, 2 at a time, until the survey was completed. Users would be able to click out of the survey and quit at any time.

| Question | Response Options | Analysis | RQs Addressed |
| --- | --- | --- | --- |
| 1. How would you rate the ease of use of the site? | 5 - Very easy to use, 4 - Easy to use, 3 - Neutral, 2 - Difficult to use, 1 - Very difficult to use | Mean and median ease of use, percentage of total for each rating | R3, R4, R8 |
| 2. Did you find what you were looking for on the site? | Yes, No | Percentage of total responses for each answer | R2, R3, R4 |
| 3. How would you rate the look and feel of the site? | 5 - Very appealing, 4 - Somewhat appealing, 3 - Neutral, 2 - Somewhat unappealing, 1 - Very unappealing | Mean and median scores, percentage of total for each rating | R7, R8 |
| 4. What did you come to the site to find today? | Open response | Qualitative data analysis (grounded theory or affinity diagramming) to identify recurring themes | R2, R5, R6 |
| 5. Which of these categories most appeal to you? | Offer site categories shown at sign-up | Percentage of users that clicked on each category, ranked by most preferred | R2, R5, R6 |
| 6. What do you find most valuable about the site? | Open response | Qualitative data analysis (grounded theory or affinity diagramming) to identify recurring themes | R9 |

(Note: This survey format is intended to increase the number of responses and to reach more users than typically complete longer surveys. The trade-off is a higher risk of incomplete data from each user, in exchange for a wider range of participants. Because we are not comparing individual users against each other and will analyze each question independently, incomplete responses should not affect the data analysis.)
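
The per-question analysis for the closed-ended questions (1, 2, 3, and 5) could be sketched as follows. This is a minimal example, not a prescribed tool: the function names are hypothetical, and it assumes that a skipped question is stored as `None` so that partial responses are kept and each question is analyzed on its own answers.

```python
from collections import Counter
from statistics import mean, median


def summarize_ratings(ratings, scale=(1, 2, 3, 4, 5)):
    """Mean, median, and percentage per rating for one 5-point question."""
    answered = [r for r in ratings if r is not None]  # drop skipped answers
    if not answered:
        return {"n": 0, "mean": None, "median": None,
                "percent": {point: 0.0 for point in scale}}
    counts = Counter(answered)
    return {
        "n": len(answered),
        "mean": mean(answered),
        "median": median(answered),
        "percent": {point: 100 * counts[point] / len(answered) for point in scale},
    }


def summarize_choices(answers):
    """Percentage per option (Yes/No or category), most chosen first."""
    answered = [a for a in answers if a is not None]
    counts = Counter(answered)
    return [(option, 100 * n / len(answered)) for option, n in counts.most_common()]
```

Because each question is summarized from its own list of answers, a user who quits after two questions still contributes to those two summaries. The open-response questions (4 and 6) still need manual coding.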

3B. User Interviews/ User Requirements Gathering

(~2 weeks) | Addresses R1-R9

Recruiting: Travel to local public venues (malls, food halls, shopping centers, etc.) and set up a booth to gather feedback from users. Aim for 10 users and offer gift card compensation. Additionally, recruit via social media for users to interview over Skype or Google Hangouts, and mail compensation to these remote participants.

Process: Talk informally with users while gathering responses to questions like:

Do you use social media?

Do you use the site?

Why or why not?

What do you think of the site?

What kinds of information do you access on the site?

What kinds of information do you access on social media?

What are your favorite things about social media?

What are your least favorite things about social media?

Data Analysis: Grounded theory or affinity diagramming to pull out trends and high-level findings.

Step 4: Generate Artifacts for Designers

(~1-2 weeks, but runs concurrently with other efforts)

Work with the design team to create personas, storyboards, and other artifacts based on data from the surveys, interviews, and site metrics/analytics. The team would refer to these artifacts regularly when making design decisions.

Step 5: Test New Designs with UX SMEs

(~ 1-2 weeks, but runs concurrently with other efforts) | Addresses R3, R4, R7, R8

UX researchers evaluate the new designs against heuristics and accessibility best practices to identify needed changes. Designs are refined iteratively before they are tested with users.

Step 6: Test New Designs with Users

(~ 1-2 weeks, but runs concurrently with other efforts) | Addresses R3, R4, R7, R8

Recruiting: Advertise on the site and on social media for remote usability tests. Use the same recruiting process as Step 3B for in-person usability tests.

Identify tasks for usability testing based on requirements identified in the stakeholder workshops, user interviews, and survey data.

Remote usability tests can be conducted through usertesting.com or through Skype using screenshare capabilities.

Offer compensation (~$10-20) in the form of gift cards, which can be mailed to remote participants after participation or handed to participants at in-person sessions. Allow for both in-person and remote participation, especially to avoid excluding users with disabilities and limited mobility.

Possible tasks: find information on a specific news story, identify an interesting story, sign up for an account, or look for a specific tweet you’ve been hearing about.

Analysis/Findings: Perform tests iteratively to improve the designs. Run 2-3 tests, refine the designs, and repeat until 8-12 usability tests in total have been completed. Use the tests to narrow the candidates down to 2 main design choices.

Step 7: UX Reviews on 2 Final Designs

(~ 1-2 weeks, but runs concurrently with other efforts) | Addresses R3, R4, R7, R8

Perform heuristic and accessibility evaluations on the 2 final designs. Resolve issues and validate that both designs have compliance equal to or greater than that of the existing site.
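
One way to make the compliance comparison concrete is sketched below. It assumes each evaluation is recorded as a checklist that maps a check name to pass (`True`), fail (`False`), or not applicable (`None`); the check names in the usage note are invented for illustration and are not taken from any specific standard.

```python
def percent_compliance(results):
    """Percentage of applicable checks that passed."""
    applicable = [passed for passed in results.values() if passed is not None]
    if not applicable:
        raise ValueError("no applicable checks")
    return 100 * sum(applicable) / len(applicable)


def meets_baseline(candidate, baseline):
    """True if the candidate's compliance is at least the baseline's."""
    return percent_compliance(candidate) >= percent_compliance(baseline)


def regressions(candidate, baseline):
    """Checks that passed on the baseline but fail on the candidate."""
    return sorted(
        name for name, passed in baseline.items()
        if passed is True and candidate.get(name) is False
    )
```

For example, with a hypothetical baseline `{"alt_text": True, "contrast": False, "keyboard_nav": True}` and a candidate `{"alt_text": True, "contrast": True, "keyboard_nav": False}`, the overall percentages are equal, but `regressions` still flags `keyboard_nav`. Reporting regressions alongside the percentage keeps a new failure from hiding behind an unchanged score.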

Step 8: A/B Testing on 2 Final Designs

(~ 1-2 weeks, but runs concurrently with other efforts) | Addresses R3, R4, R7, R8

Run an A/B test on the live site, assigning 50% of users to design A and the other 50% to design B. Measure bounce rate, site traffic, time on page, and time on site. Compare the results for designs A and B with each other and with the original site.

Prompt one in four users with the survey from Step 3A. Compare the survey results for designs A and B with each other and with the original site to confirm that the new design improves on the original design's scores.
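
The sketch below shows one possible way to handle the 50/50 assignment, the one-in-four survey prompt, and a bounce-rate comparison. The plan does not name an assignment mechanism or a statistical test, so the hash-based bucketing and the two-proportion z-test are assumptions chosen for this example, as are the function names and the salt strings.

```python
import hashlib
import math


def bucket(user_id, salt, buckets):
    """Map a user to a stable bucket in range(buckets) by hashing the ID."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def assign_design(user_id):
    """Roughly half of users see design A, the rest design B."""
    return "A" if bucket(user_id, "homepage-ab", 2) == 0 else "B"


def should_prompt_survey(user_id):
    """Roughly one in four users is shown the Step 3A survey."""
    return bucket(user_id, "survey-prompt", 4) == 0


def two_proportion_z_test(bounces_a, visits_a, bounces_b, visits_b):
    """Two-sided z-test for a difference between two bounce rates."""
    p_a = bounces_a / visits_a
    p_b = bounces_b / visits_b
    pooled = (bounces_a + bounces_b) / (visits_a + visits_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    if se == 0:
        raise ValueError("bounce rates have no variance to compare")
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value
```

Hashing the user ID keeps a returning user on the same design across visits, and using a different salt for the survey prompt keeps survey selection independent of the design assignment. The z-test applies only to rate metrics such as bounce rate; time on page and time on site are usually skewed and would need a different comparison.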

Step 9: Implement a design that is validated, and make updates based on user feedback

Use the results to determine which design to implement. Use results from surveys, testing, and analytics to demonstrate that the new design outperforms the old design on look and feel, usability, meeting user needs, accessibility, and value.

Step 10: Continual Process Improvement and Monitoring of Site Metrics

Implement a feedback button on the site homepage (before login) to allow users to report issues with the site.

Monitor site analytics to identify dips and spikes in traffic, high bounce rates, and frequently used search terms, as these might point to possibilities for improvement.

Timeline for Process

| Week | Activities (teams involved) |
| --- | --- |
| 1 | S1: Stakeholder requirements meeting (ALL); S2A: ES (existing site) Site metrics analysis (Research); S2B: ES Heuristic evaluation (Research); S2C: ES Accessibility evaluation (Research); S2D: Competitive Analysis (Research and Design) |
| 2 | S1: Stakeholder requirements meeting (ALL); S2A: ES (existing site) Site metrics analysis (Research); S2B: ES Heuristic evaluation (Research); S2C: ES Accessibility evaluation (Research); S2D: Competitive Analysis (Research and Design); S3A: User Survey Design (Research and Development (to implement popup)) |
| 3 | S3A: User Survey Launch + Data Collection (Research and Development); S3B: User Interviews/User Requirements Gathering (Research) |
| 4 | S3A: User Survey Data Collection; S3B: User Interviews/User Requirements Gathering (Research) |
| 5-6 | S4: Generate Artifacts (Research and Design); S5: Test New Designs with UX SMEs (Research and Design) |
| 7-9 | S6: Iterative Usability Testing and Refinement (Research, Design, Development) |
| 10-11 | S7: UX Reviews on Final 2 Designs (Research); S8: A/B Testing on 2 Final Designs (Research, Development) |
| 12 | S9: Implementation of Design (Development); S10: Implementation of Feedback tool (Development) |
| Ongoing | S10: Monitor site analytics and feedback tool for improvements (Research, Development) |